Least Squares Rational Function

In my paper “Fast and Accurate Analytic Basis Point Volatility”, I use a table of Chebyshev polynomials to provide an accurate representation of some function. This is an idea I first saw in the Faddeeva package to represent the cumulative normal distribution with high accuracy, and high performance. It is also simple to find out the Chebyshev polynomials, and which intervals are the most appropriate for those, which makes this technique quite appealing.

Still, it would have been nice to have also a more visually appealing rational function representation, as W. Shaw did for the cumulative normal distribution (again). Popular algorithms to find the best rational function representation seem to be minimax or Remez. But I could not find an open-source library where those were implemented. There is an interesting implementation in chebfun but this depends on Matlab.

The Numerical recipe book provides a simple algorithm in chapter 5.13, not looking for the best possible rational function, but just for one that would be “good enough”. Interestingly, the first part of the algorithm merely computes a least squares solution on some chebyshev like nodes. I however quickly noticed funny behaviors with the code: it could produce a worse fit for a higher order numerator or denominator. Then I tried some least squares python code which ended up being just buggy: I am not sure what the code actually does with all the numpy and scipy magic, it gives solutions with poles in the data, and clearly not the least squares solution. I can’t fully understand why one would propose such a code.

It turns out that I had an alternative very basic least squares polynomial fit implementation, which is based on this matrix representation. I wondered if it would be as prone to errors as Numerical recipe code (where they use SVD internally to solve).

The answer is: it depends. It depends on the solver used. If I use a QR solver, then the implementation looks robust on my test data, much more than Numerical recipe code. If I use LU, it just fails in some cases. If I use SVD, it is sometimes better sometimes worse than Numerical recipe.

Interestingly, I thought, that, maybe, a gradient descent could allow to regain the lost accuracy with SVD, using as starting point, the SVD guess. However, it does not work, it just converges more or less to the same (bad) solution.

Another interesting point, is that the using QR decomposition (instead of SVD) in the Numerical recipe implementation resulted in a much better solution, better even than my basic least squares fit, which looked robust, but was actually not so much.

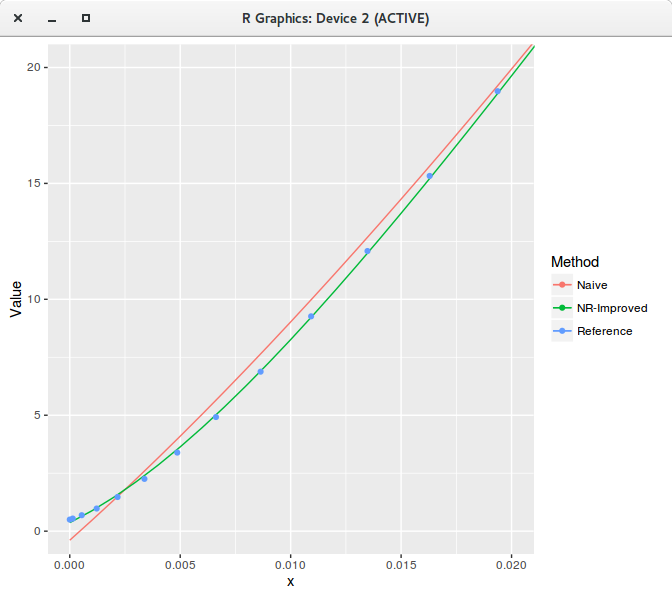

Armed with this improved Numerical recipe code (labeled NRI), here is a comparison of fit of my naive code (with QR) against the improved numerical recipe (NRI) for a polynomial of degree 20. We can visually see the difference (when we zoom)!

Least squares polynomial fit of degree 20 zoomed.

The RMSE difference on the full interval [0,1] on 1000 equidistant points is huge:

| Method | RMSE |

|---|---|

| Polynomial Naive | 0.234 |

| Polynomial NRI | 0.039 |

| Rational NRI | 0.001 |

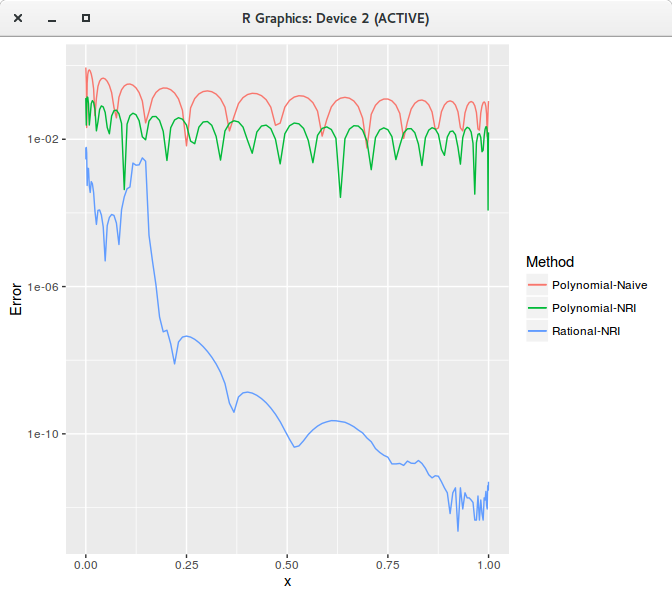

A 10/10 rational function (numerator of degree 10, denominator of degree 10) gives a much better fit than a polynomial of degree 20. It is interesting to look at the error visually to understand how large is the difference:

Least squares polynomial fit error of degree 20.

I still can draw a conclusion to my quest for a rational function approximation: I won’t find a good one with the change of variables I am using in my paper, as I would imagine the least squares solution to be at worst around one order of magnitudes away from the minimax. The errors I got suggest I would need a few rational functions, maybe 3 or more, and then it does not look all that appealing compared to the table of Chebyshev polynomials.

I thought this was a good example of how relatively simple numerical tasks can be challenging in practice.

Update - The paper now contains a solution for the normal volatility inversion problem with only 3 rational functions.